Data on arm motion control for the human-machine interface

A new “multi-modal” dataset on arm motion control, published recently in GigaScience, is an important contribution to the development of robotic prosthetic devices and other tools at the interface between human and machine.

Robotic arms and other devices that support limb movements are getting ever more sophisticated. New materials and new computational approaches are paving the way for prosthetic devices that “blend in” with the human organism. However, to navigate the interface between human and machine, data is needed on all aspects of arm motion control, in both healthy and disease conditions.

Developers of robotic arms and other human-machine interfaces that support limb movements need quantitative data that describe how human limbs operate during everyday tasks, such as lifting a cup of tea to your lips or turning a page in a book.

One of the biggest datasets on human motor control available

Although these tasks look trivial from the outside, the underlying kinematics and the involvement of diverse muscle groups are complex, even more so when interaction with objects (an apple, a cup, a page of a book) is involved. To really understand what is going on when we reach for an apple and take a bite, for example, researchers need quantitative information on arm movements and the corresponding activity patterns of muscles. They also need data on brain activity and other physiological parameters connected to arm motion control.

A collaboration coordinated by Istituto Italiano di Tecnologia, with the participation of the University of Pisa and other scientists across Europe, has now published one of the biggest datasets on human motor control available: a “multi-modal” data collection of upper limb movements, in both healthy and post-stroke conditions (see the GigaScience Data Note referenced below). Corresponding author Giuseppe Averta says,

“It represents a previously unmatched source of information for the research community, because it will enable a deeper analysis not only of how the motor control works looking at specific functional domains, but also on the relationship between them.”

Technology for real people with real needs

The researchers met under the roof of “SoftPro”, an initiative that aims to “study and design soft synergy-based robotics technologies to develop new prostheses, exoskeletons, and assistive devices for upper limb rehabilitation”. Averta says the aim is not only to develop new technology and better performing devices, but also to help make the technology accessible to real people with real needs:

“It is the result of a strong integration and coordination effort. U-Limb is now publicly available and published in the open access journal GigaScience, coherently with the open approach to innovation that characterized the Project.”

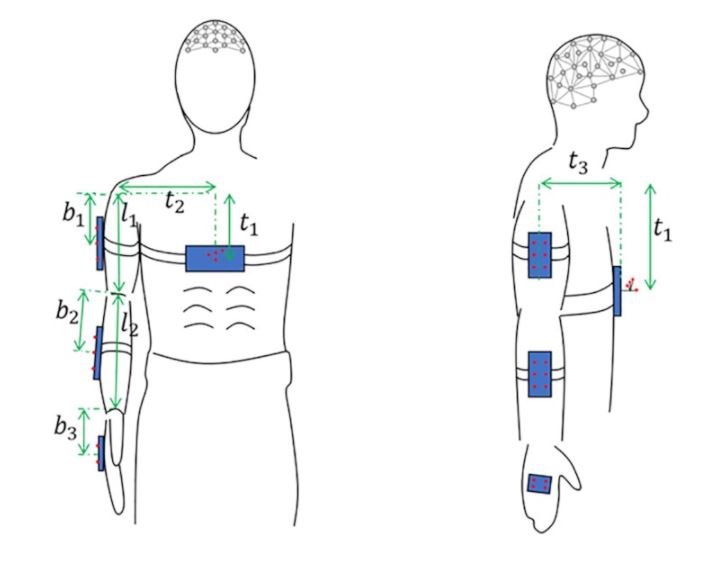

In more detail, the newly published article is based on data from 91 able-bodied and 65 post-stroke participants. The data combines tracking of movements (kinematics) with data on muscle activity, heart rate and brain function. This integration of different data sources is one of the great strengths of the project, Averta explains:

“It will be possible to study how certain cortical patterns reflect in muscular activation, and how this influences the coordinated movement to execute a certain task.”

To achieve such a multi-modal data collection, the researchers used three protocols:

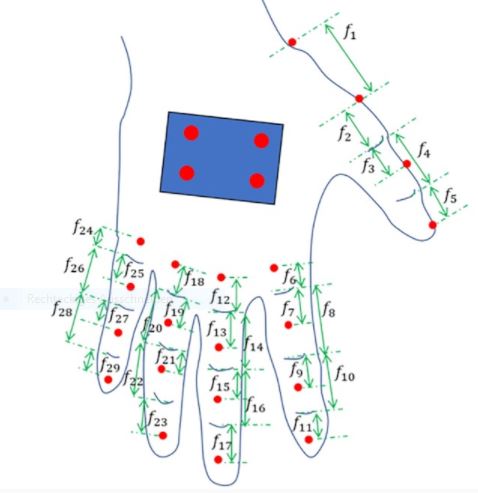

1) Participants performed a range of daily activities, such as pointing at something, grasping the handle of a suitcase, or removing a tea bag from a cup. During these tasks, limb movements were recorded on camera using defined marker positions, while muscle activity, brain activity and heart rate were measured at the same time.

2) Hand grasping was also studied in fMRI experiments.

3) In addition, a device called VPIT (“virtual peg insertion test”) was used to collect data on the coordination of arm and hand.

For some of the experiments, a subgroup of the participants wore a “full body sensor suit”, equipped with gyroscopes and accelerometers.

The full dataset is openly available via the Harvard Dataverse repository.

Use cases for humans and robots

What are potential use cases for this new data? Averta explains more:

“For example, a study of the coordination across joints could be used to inform the design of under-actuated prostheses that can replace missing limbs with a limited number of motors. On the other side, we can exploit the knowledge of natural behavior to provide a comparison with stroke patients, helping clinicians in assessing the pathology’s severity.”

There may even be applications in industrial robotics, for example in robotic approaches where machines are trained to replicate human behavior:

“This would be an asset to develop manipulators, and in general humanoid robots, that behave as a human would actually do in the same situation.”

Read the GigaScience Data Note:

Averta G, Barontini F, Catrambone V, Haddadin S, Handjaras G, Held JPO, Hu T, Jakubowitz E, Kanzler CM, Kühn J, Lambercy O, Leo A, Obermeier A, Ricciardi E, Schwarz A, Valenza G, Bicchi A, Bianchi M. U-Limb: A multi-modal, multi-center database on arm motion control in healthy and post-stroke conditions. GigaScience. 2021 Jun 18;10(6):giab043. doi: 10.1093/gigascience/giab043.

Data available here:

Averta G, Barontini F, Catrambone V, Haddadin S, Handjaras G, Held JPO, Hu T, Jakubowitz E, Kanzler CM, Kühn J, Lambercy O, Leo A, Obermeier A, Ricciardi E, Schwarz A, Valenza G, Bicchi A, Bianchi M. U-Limb: A multi-modal, multi-center database on arm motion control in healthy and post-stroke conditions. Harvard Dataverse, V3. 2020, https://doi.org/10.7910/DVN/FU3QZ9