The Utility and Danger of AI in Scientific Publishing: Riddles of the Sphinx – GigaScience at WCRI 2024

The 8th World Congress on Research Integrity (WCRI) took place in Athens, Greece from 1st-5th June. GigaScience Press are regular attendees of this conference, and this year our organisation was represented by GigaScience Editor-in-Chief Scott Edmunds, Executive Editor Nicole Nogoy, and Data Scientist Chris Armit. A major theme of the conference was the double-edged sword that is the use of AI in Scientific Publishing and its effect on research integrity. The topic was of huge interest this year, as attested by the record 800 registrants, packed conference rooms, and many satellite workshops on image screening and paper mills. We report below on some of the major highlights from the conference.

Photographer: Chris Armit

Research Integrity: Analogies with Ancient Athens

The conference organisers are to be commended for their bold choice in inviting the theologian Professor Jan Helge Solbakk (University of Oslo) to deliver a keynote talk at the start of the Congress entitled “The case of Oedipus: Excellence vs Integrity in Research”. Professor Solbakk observes analogies between the tragic hero Oedipus, and the contemporary scientific researcher. Indeed, as Professor Solbakk explained, two plays by the great Athenian playwright Sophocles – ‘Oedipus the King’ and ‘Oedipus at Colonus’ – could be used to “dramatise the rise, fall and rehabilitation of an eminent researcher”. As Professor Solbakk explained, “research is at the same time a comic and a tragic enterprise”.

To illustrate this point, Professor Solbakk recalls the tale of the young Oedipus, a migrant from Corinth, who, at the gates of Thebes, is asked a riddle by the Sphinx.

“What walks on four legs in the morning, two at midday, and three in the evening?”

The Sphinx asks this question to each and every person who wishes to enter the city of Thebes, and those who fail to answer the riddle are eaten by the Sphinx. Oedipus answers the question with, “Man”, and in doing so solves the riddle of the Sphinx. Mankind crawls on four limbs as the infant baby, walks upright on two limbs as the child, adolescent, and adult, and requires a walking stick for stability in advanced age. The morning, midday, and evening of the riddle were but metaphors for a human lifetime. The Sphinx cannot bear that her riddle has been solved by a human, and plunges herself to death.

Professor Solbakk sees in this ancient tale an archetype of how society observes the successful scientist. This is the scientist as problem-solver, the scientist as hero.

Jan Helge Solbakk compares the dilemma of the modern scientist to plays by the Greek tragedian Sophocles.

But Oedipus was also a comic figure. On becoming King, Oedipus has an overinflated self-belief in his ability to solve problems, a position which leads him to question and lampoon the blind seer Tiresias into disclosing to him the identity of the murderer of King Laius. This is dangerous knowledge. Oedipus was the murderer of King Laius, and Oedipus now understands that he killed his own father and married his mother. As Professor Solbakk explained, with this knowledge “Oedipus the comic figure is transformed into a tragic hero”.

As Professor Solbakk further explained: “While insisting he had done everything to avoid killing his father and mating his mother, he at the same time insists: I am innocent and at the same time I am responsible”.

Professor Solbakk is swift to point out parallels between the responsible researcher and the tragic hero. Indeed, as Professor Solbakk explained: “This is a paradigmatic example of what integrity should entail; acceptance, even, of inevitable forms of failure and misconduct…forms of error or mistake where self-blame, but not blame from others, is warranted”.

The GigaScience Press team considered this a highly thought-provoking and fascinating talk, which evokes the sense that our quest for research integrity, which we thought of as a recent venture, is in essence timeless.

Photographer: Chris Armit

The Trouble with Generative AI

A major theme of the conference was the use of Generative AI in scientific publishing, and what we should do about it. It is important to note that not all AI is generative, and that computational biologists utilise clustering, classification, and prediction on a near-daily basis as a significant element in their AI research toolkit.

As an Open Science publisher and an advocate of transparency in publishing, at GigaScience Press we have recently updated our Editorial policies to allow authors to utilise AI tools and technologies in paper writing with the specification that the authors check and take responsibility for the AI’s work (see the COPE position statement on this). These policies were heavily influenced by the recommendations of Mohammad Hosseini et al. on the ethics of disclosing the use of artificial intelligence tools in writing scholarly manuscripts, and it was great to see Mohammad present on this topic at the meeting. For more on this see our recent GigaBlog “Transparency FTW! LLMs, OpenBoxScience and GetFreeCopy”.

Many talks at the conference seemed to endorse this approach, both in publishing papers and grant writing, with the talk by Dr Sonja Ochsenfled-Repp (German Research Foundation) on “The impact of generative models for text and image creation on good research practice and on research funding” being particularly informative in this regard.

As Dr Ochsenfled-Repp explained in her talk, an Initial Statement by the Executive Committee of the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation) on the Influence of Generative Models of Text and Image Creation on Science and the Humanities and on the DFG’s Funding Activities specifies the following:

- When making their results publicly available, researchers should, in the spirit of research integrity, disclose whether or not they have used generative models, and if so, which ones, for what purpose and to what extent.

- In decision-making processes, the use of generative models in/for proposals submitted to the DFG is currently assessed to be neither positive nor negative.

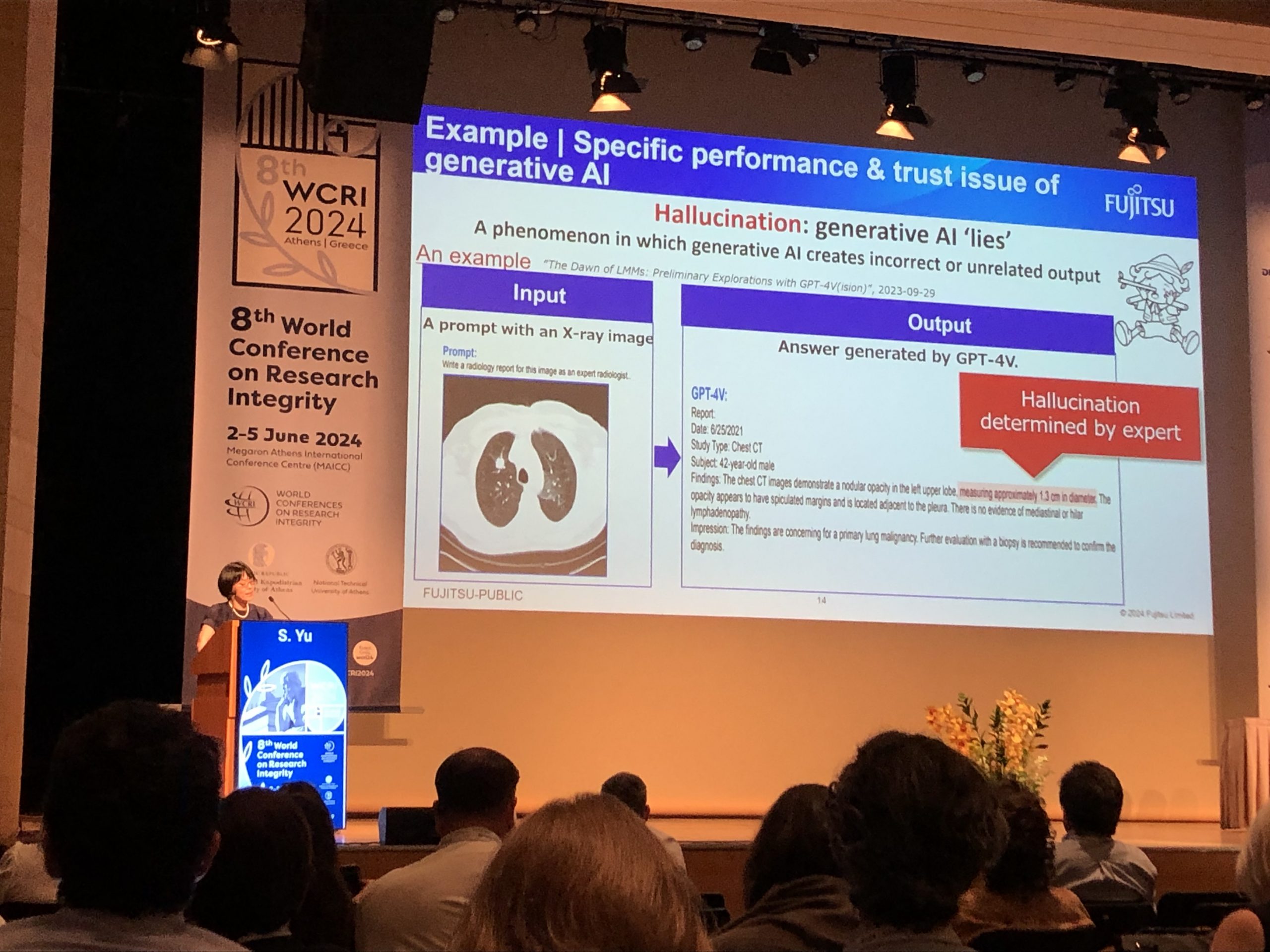

The Plenary session entitled, “Perspectives on Research Integrity and Generative Artificial Intelligence” was particularly insightful in highlighting some of the concerns with AI. As Dr Shanshan Yu (Fujitsu), in her talk entitled “Perspective from Industry” explained, “Generative AI…(is) an artificial intelligence technology that uses machine learning algorithms to generate contents”.

Shanshan argues that Generative AI technology “can be used to promote creativity, fidelity, and usability”.

However, one of the main drawbacks of Generative AI is its ability to generate what Shanshan refers to as “hallucinations”, which are “Generative AI lies”. As Shanshan explained, hallucination is “a phenomenon in which Generative AI creates incorrect or unrelated output”.

Shanshan Yu details the problem with Generative AI.

For what is now an infamous example of Generative AI hallucinations, one that was certainly doing the rounds at this year’s WCRI, we refer you to an article by the Science Integrity Digest. However, some hallucinations may be more difficult to spot, and may require domain knowledge and expert advice. The latter represents a significant challenge for the scientific publishing community.

Towards this end, Professor Karin Verspoor (RMIT University, Melbourne), in her talk entitled “Impacts of GenAI on Research: The Good, the Bad, and the Ugly”, explained that “GenAI will contribute to…generating images that communicate a concept rather than reflect reality”. Indeed, Professor Verspoor summed up the role of Generative AI as, “easy generation of text paraphrases, easy generation of fabricated images, easy generation of fabricated data/tables, generation of text describing data, instant literature reviews”.

This is immensely worrying from a scientific publishing and research integrity, and there is a clear need for tools that identify fake papers and fake data. However, Professor Verspoor additionally highlighted a potential role of AI in fraud detection, and believes that GenAI will contribute to combating fraud through “image duplication detectors, paraphrase / text similarity measurement, and inappropriate citation classification”.

It is important to note that there are image duplication detection tools – such as Imagetwin – the utility of which are currently being explored by key scientific journals such as eLife. These tools compare images in a submitted manuscript against a database of images from previously published scientific publications, and their utility is that they are able to identify reuse of images. However, there is a salient need for computational tools to identify GenAI fabricated images in scientific publications.

We look forward to GenAI-based tools that will assist the scientific publishing community in fraud detection.

Paper Mills and How to Spot Them

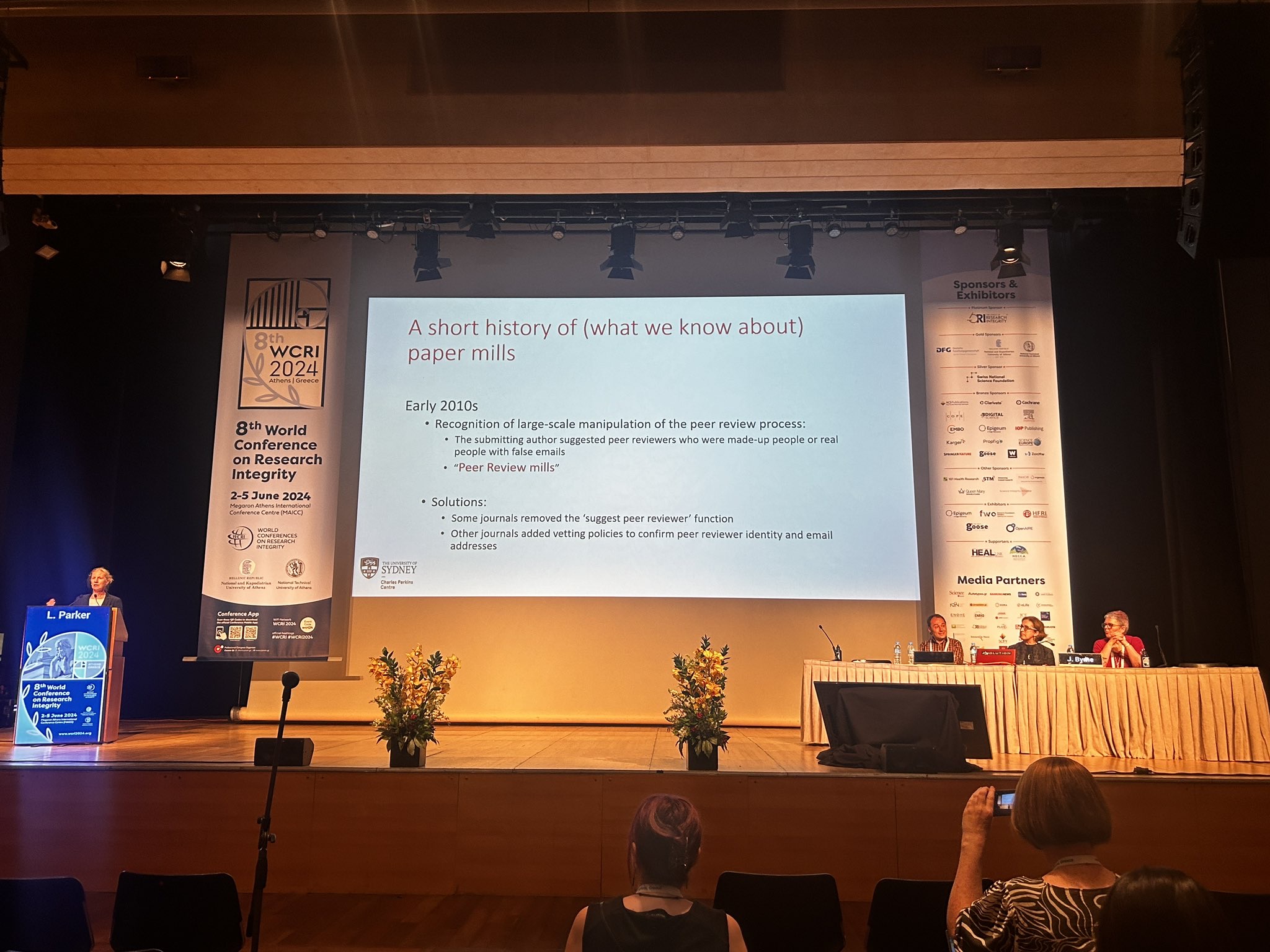

The Workshop on paper mills and also the Plenary session “Addressing the challenge of paper mills through research and policy”, both chaired by Professor Jennifer Byrne (University of Sydney), were very timely and well suited to the focus on AI in this year’s WCRI. This is a topic we have tried to pre-empt and defend ourselves with by our stringent open science policies (particularly for data and transparency), as well as by trying to tackle the misguided authorship incentive systems that have lead to these practices. And in the past we have even identified paper mills operating in our Hong Kong base. The talk by Dr Lisa Parker, entitled “The Paper Mill challenge” provided a very helpful overview of the situation. Paper mills were first noticed in the early 2010s when Editors started noticing large-scale manipulation of the peer-review process. As Lisa explained, “the submitting author suggested peer reviewers who were made up people or real people with false emails”. These became known as “peer review mills”.

In 2024, Editor bribery activity was uncovered, with Lisa highlighting in her talk, “Journal Editors being offered cash to publish paper mill papers, to increase likelihood of paper being published”.

Lisa was swift to point out that paper mills should be considered an “organised crime syndicate” that often target a “busy journal with high-volume submissions”. As Lisa explained, the Editor sends the paper for peer review using the suggested, yet bogus, reviewers. Following peer review, which is going to be favourable due to the corrupt nature of the suggested reviewer, the paper is accepted. At this point, the authorship list is amended, and the fee-paying consumer of the paper mill enterprise is added to the list of authors. Following this, the paper is published.

Lisa Parker presents on Paper Mills.

Lisa highlighted that there is a need for detectives / sleuths to spot paper mill activity, which could be a spike in similar publications on a similar topic, a series of papers with very similar titles, or evidence of image manipulation. A key concept here is the integrity squad. As Lisa explained, the “integrity squad supports and guides Editor / Editor-in-Chief investigation” and will additionally check authors’ other submissions and publications, and other papers reviewed by the same peer reviewers in an effort to identify paper mill activity.

A major issue is that, as Lisa explained, paper mills “adapt in response to publisher attempts to identify them”. Indeed, with GenAI and various Large Language Model (LLM) programs, such as ChatGPT, we envisage that paper mills will become increasingly more complex and difficult to identify. Lisa highlighted that there is a salient need for AI tools to assist publishers in the paper mill detection process.

Concerns with the Reproducibility of Research

The prestigious Steneck-Mayer lecture was presented by the pioneering Professor John Ioannidis (Stanford University) who has published extensively on evidence-based medicine and epidemiology. Professor Ioannidis put to the audience the bold assertion that most scientific research is non-reproducible (see his classic essay “Why Most Published Research Findings Are False”). There are multiple reasons for this, including suboptimal research practices and “power failure” due to small sample sizes that are insufficient for the generation of statistically significant results.

To highlight the issue, Professor Ioannidis highlighted some key features of “Small data” science. These are typically “small sample size studies” with a “solo, siloed investigator” and the research practice would include “cherry-picking of one/best hypothesis” in a “post-hoc” manner and often “no data sharing”.

In contrast, Professor Ioannidis also highlighted some key features of “Big data” science. These include “extremely large sample size” or even “overpowered studies”, but Professor Ioannidis was swift to point out that these big data studies also typically suffered from “cherry-picking of one/best hypothesis” in a post-hoc manner. Additional concerns with big data studies were “idiosyncratic statistical inference tools without consensus” and “data sharing without understanding what is shared”. Consequently, data sharing, in and of itself, is not a guarantee of reproducibility.

However, what is most worrying is what Professor Ioannidis refers to as “No data” science. Almost 10 years ago, Professor Ioannidis published a commentary entitled “Stealth Research: Is Biomedical Innovation Happening Outside the Peer-Reviewed Literature?” in JAMA which reported on “a privately held biotechnology company” that had developed “novel approaches for laboratory diagnostic testing”. What was most disconcerting is how well received the diagnostic testing had been by publishers in the business world. Indeed, as Professor Ioannidis explained in his commentary, the “novel approaches” as developed by this private company had “appeared in The Wall Street Journal, Business Insider, San Francisco Business Times, Fortune, Forbes, Medscape, and Silicon Valley Business Journal”. However, there were no reports of the diagnostic testing by this privately held biotechnology company in the peer-reviewed biomedical literature. And the raising of these concerns was a turning point in a story which we all now know the sad ending.

John Ioannidis explains the myriad issues around reproducible research.

This phenomenon of what Professor Ioannidis refers to as “No data” science underscores the salient need for reproducibility indicators, data standards, and above all else, trust and transparency in research practices that scientists and stakeholders can collectively use to ensure that research is truly reproducible.

The 9th World Congress on Research Integrity will take place in Vancouver in 2026.

References

Ioannidis JP. Stealth research: is biomedical innovation happening outside the peer-reviewed literature? JAMA. 2015 Feb 17;313(7):663-4. doi: 10.1001/jama.2014.17662.

Zauner H, Nogoy NA, Edmunds SC, Zhou H, Goodman L. Editorial: We need to talk about authorship. GigaScience. 2018 Dec 1;7(12). doi:10.1093/gigascience/giy122.

Ioannidis JP. Why most published research findings are false. PLoS Med. 2005 Aug;2(8):e124. doi:10.1371/journal.pmed.0020124.