Addressing the challenges of sharing computational workflows with Yevis. Q&A with Tazro Ohta.

At GigaScience we see more and more of our published papers using computational workflows, as they provide researchers with easy access to high-quality analysis methods without requiring computational expertise. However, systems are needed to enable the sharing of these workflows in a reusable form. To fill that gap, we have recently published a paper on Yevis, a system for building a workflow registry that automatically validates and tests published workflows. The new system is particularly useful to those who want to share workflows but lack the specific technical expertise to build and maintain a workflow registry from scratch. Yevis was started by Hirotaka Suetake and Tazro Ohta at the Database Center for Life Science in Shizuoka, Japan, with Hiro as the main developer of the implementation and Tazro as the project lead. Continuing our long-running series of author Q&As (and videos), we present a Q&A and video abstract (see below) with Tazro, in which he gives some insight into archiving workflows and his new platform.

What are the challenges in sharing computational workflows, and how does Yevis help address these?

If you just want to make your workflows public, it isn't a difficult task. Many public web services, such as GitHub, can host your resources, and some are free of charge. Sharing your workflow has huge potential to help researchers who want to perform similar analyses. However, published workflows may stop working for many reasons, for example an update to the software they depend on or an unexpected change to the data they rely on. To keep your workflow usable, you need to maintain it, and that costs valuable time and money. We therefore wanted to help researchers by providing a platform that performs workflow testing automatically.

Why should people wanting to share workflows use this platform? What are the advantages for users?

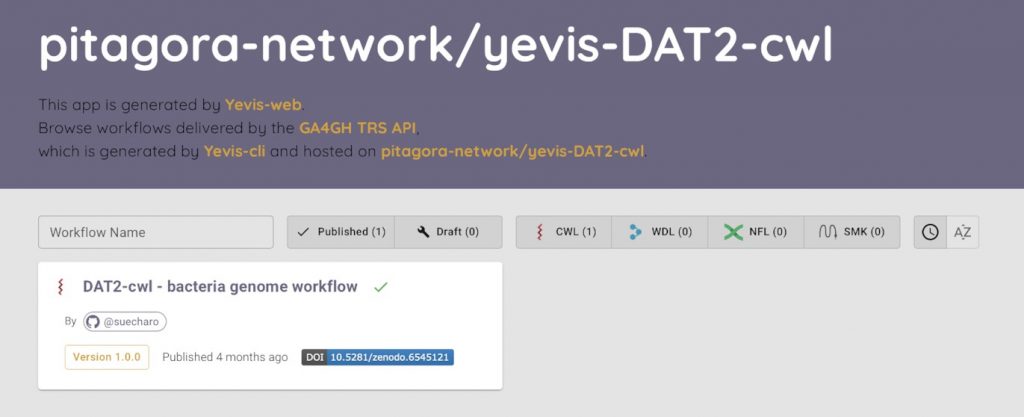

With Yevis, you can create your own workflow registry. The platform runs on GitHub and Zenodo, so you don't need your own server. Yevis's most significant feature is that it helps you maintain the uploaded workflows automatically. Once you register your workflow together with its test data, the platform takes care of test execution.

This will sound great if you have ever been told that your published resources no longer work and need fixing. That is exactly why we started this project.

Can you tell us the backstory behind the name "Yevis"?

Naming projects and software has been the most time-consuming phase of my research. I always tried to come up with names as meaningful or as humorous as those of other popular bioinformatics databases and software. Then one day I realized that this was just a waste of time, at least for me, and that it was something I had to automate to make my life easier. So I invented a method for naming projects, and the name 'Yevis' came from it. I hope to publish the method one day, but you may already notice something the names of our projects have in common.

What are your future development plans?

I believe the idea of automated workflow testing is beneficial to many, and our implementation is ready to use. My team already uses it for its own registry, and it is working perfectly. However, I see some limitations in testing. For example, if a workflow requires a large volume of test data, testing may fail because of GitHub's storage limits. Designing feasible workflow tests with small datasets may also not be easy for users who don't usually think about testing, and this is something we want to help with through automation. I would therefore like to work on a method to automate the testing phase of workflow development, which would save a lot of time and make researchers more productive. I believe it could also contribute to improving the reproducibility of science.

Workflows have been a long-running area of interest for the journal, and since our launch we have tried to keep on top of the state of the art in this area (e.g. see the previous workshops we have organised on the topic and our Galaxy series). As well as promoting best practice and the use of registries like Yevis and workflowhub.eu, we encourage submissions covering this important area of computational research.

Further Reading

Suetake H et al., Workflow sharing with automated metadata validation and test execution to improve the reusability of published workflows, GigaScience, Volume 12, 2023, giad006, https://doi.org/10.1093/gigascience/giad006