The lowest common denominator: marketing science with jIF

Shallow Impact. ’Tis the season.

In case you didn’t know, the world of scientific publishing has seasons. There is the Inundation season, which starts in November as authors rush to submit their papers before the end of the year. Then there is the Recovery season, beginning in January, as editors come back from the holidays to tackle the glut. The season of Absence hits in mid-July, as all of Europe prepares to go on a month-long vacation and every editor scrambles to find just one reviewer.

But the most magical season of all happens in June: the season of the Journal Impact Factor (jIF). At this mystical time of year, every editor, publisher, and marketing team wonders: “Oh, what will the Web of Science bring us this year?”

All joking aside, it is hard to ignore jIF, because it has a huge impact on researchers’ careers and on journal success. Still, none of us can help noticing that this all-powerful number is very much like the Wizard of Oz: what is really going on behind the curtain, and should the wizard be invested with that much power?

The clear answer is, well, no.

Journal IF wasn’t developed to do what people now use it for. It doesn’t actually indicate which scientists are doing good research, and it certainly doesn’t measure the long-term impact of findings. It is a number based on a single parameter. By focusing purely on short-term citations, it rewards short-term citations over everything else, leading to higher-ranked journals publishing the most unreliable science and having the highest retraction rates. No one knows exactly how it is calculated. And it can be shamelessly manipulated by journals.

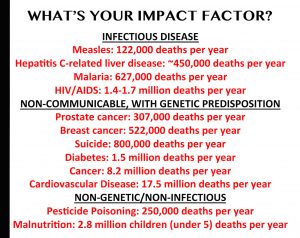

At the same time, it takes a heavy toll on how quickly researchers share information, because they hold back results to make sure they can publish in the highest-IF journals. The delay can add up to years if they submit to one journal after another, each with a successively lower jIF, to get into the highest-jIF journal possible. Yet I seriously doubt that the ~22,000 people dying every DAY from, for example, cancer care where it is published.

A fair amount of work has gone into creating new or modified metrics for measuring ‘true’ impact, but each has drawbacks and can also be manipulated. Moreover, most focus on publication rather than including things like sharing information prior to publication, posting to preprint servers, freely sharing data and source code, writing complete methods, collaborating, educating young researchers, doing reproducible research, and more: you know, the things that actually promote rapid communication, learning best practices, and broadly advancing science.

As a journal published by a scientific institution in China, we see the glaring negative impact on research and scientific communication firsthand. Here, jIF has been taken to an extreme. For example, many universities require that, in order to graduate, students must publish in a journal with an IF of 2 or more. One-upmanship has led some institutions to now require publication in a journal with an IF of 6 or higher!

Worse, the Chinese government formally acknowledges the importance of jIF with monetary rewards. This mechanism, of course, can only beget institutionalized ways to promote unethical behavior or outright fraud. Because academic salaries can be relatively low, this is potentially big money, with institutions such as Zhejiang University openly reporting author payments of over $30,000 for articles published in the top impact factor journals (anecdotal stories indicate the amount can be even higher).

So what is the ultimate cost of the impact factor? Well, according to investigations by Guillaume Filion, about $10,000, at least for a ready-written publication in an impact factor 2 journal. And Mara Hvistendahl in Science uncovered a “publication bazaar” of companies adding last-minute authorship to in-press papers in supposedly “high impact” journals. The issue of “paper mills” ghost-writing publications to order, and guaranteeing publication in impact factor journals through peer-review fraud, is a dirty secret of the publishing industry. Because this is carried out on a near-industrial scale by a number of companies in China, the several hundred papers highlighted by Retraction Watch for peer-review fraud are likely just the tip of the iceberg. We have written op-eds about the same skewed incentive systems potentially spreading to Hong Kong, and have subsequently uncovered companies in Hong Kong offering similar ghost-writing and “guaranteed jIF publication” services for even less than those in mainland China.

While researchers in other countries can shake their heads in astonishment at these overt activities, is the push in their own countries to publish in these same journals really any different, other than in its formality? And its very obscurity makes it harder to root out. For example, you can’t see or quantify where, or how often, a department dean quietly suggests that researchers try to publish in higher-jIF journals, or how often advisors tell students not to share information so that they can get published in high-jIF venues. Plus, it is hard to truly ignore jIF and reduce any inherent prejudice when jIFs are announced, published, and highlighted everywhere. Coming to a billboard near you…

Okay, yes: jIF is bad when there is too much focus on it for researcher success, and its importance is hard to weed out. (Why it is bad has been written about ad nauseam, too many times to reference: just Google “journal impact factor bad”.)

So, why am I writing about this?

Because, hooray, GigaScience obtained its first impact factor last month: a sigh of relief… but mostly a feeling of rage.

The entire process of jumping through hoops to get tracked leaves far more than just a bad taste in one’s mouth: knowing it matters when it shouldn’t; being told how many articles of certain ‘types’ should be published; limiting the number of references in certain article types because this might affect the denominator in the jIF ratio of citations to articles; trying to estimate the jIF before it comes out and realizing how very non-transparent that calculation is; and telling people jIF isn’t a good metric while a journal doesn’t have one, then trumpeting it to the heavens once it does…
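To make the denominator point concrete, here is the commonly cited two-year definition, written as a sketch rather than the indexer’s exact procedure (which items count as “citable” is decided by the indexer, and that classification is precisely the non-transparent part). For a journal in year $Y$, $C_Y(y)$ is the number of citations received in year $Y$ by anything the journal published in year $y$, and $N(y)$ is the number of items published in year $y$ that were classified as citable (typically research articles and reviews):

$$
\mathrm{jIF}_Y = \frac{C_Y(Y-1) + C_Y(Y-2)}{N(Y-1) + N(Y-2)}
$$

With purely hypothetical numbers: 300 citations to 150 citable items gives 300/150 = 2.0. If 50 of those items were instead classed as non-citable (say, short commentaries) while still drawing the same citations, the same 300 citations over 100 items gives 3.0. That is why article-type mix, and anything that might influence how an item is classified, matters so much to journals chasing the number.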

Discussions about the problems of jIF often conclude that the easiest way to reduce the jIF effect is for funding agencies and research institutions to stop using jIF when making financial decisions (and many have). However, this isn’t so simple: funding agencies, research institutions, and universities can use how often their researchers publish in high-jIF journals as part of their fundraising and to increase student applications… So who, really, can we target as the main player to stop the cycle?

Obviously, the response needs to be multi-pronged; this idea isn’t new, but it isn’t easy to implement. But one group could, in a single globally agreed sweeping move, both make jIFs harder to find and make them less “in your face”: publishers, if they all agreed to remove this number from their websites, their marketing flyers, and their emails.

Several publishers and journals (e.g., eLife and PLOS journals) do this, but in just the three weeks since the latest jIFs came out, I have received a bonanza of emails announcing first jIFs, increased jIFs, established jIFs, and “here are all of our journals’ jIFs”. I almost feel that some deranged used-car salesman has taken up permanent residence in my inbox. But a real indication of how embedded jIF has become in the publishing industry is this: even though our publisher firmly supported us when we requested that our jIF not be posted on our or their website, in marketing flyers, or in emails, it nevertheless appeared up front, at the top, and in bold in our most recent article-alert email. This was not because our publisher decided to ignore how seriously we felt about this; it was simply that the publisher’s auto-alert emails for journals use a standard template that includes the jIF for any journal that has one. No one sees these emails before they go out, and so it was missed. That is how hard-wired jIF use has become in journal marketing: it is an auto-include.

Yes, I understand that researchers want to know it, but we’re not using it to give researchers what they want: we’re using it to sell journals. That is a shame, when we, like everyone else, know it does more harm than good.

Reducing the apparent importance of this number is something we as journals and publishers can actually do quite easily (once auto emails are updated, of course).

So, Springer Nature and BMC, while I thank you for being on board with our wish to eliminate the use of jIF in our marketing, I implore you to remove jIF from every one of your journals’ websites, flyers, and emails.

At the very least, sign DORA, the San Francisco Declaration on Research Assessment (http://www.ascb.org/dora), and follow its recommendations for reducing the use of jIF. Better still would be to take a stand with the publishers that have already dropped it and simply eliminate it completely.

I also appeal to other publishers to do the same. Editors, you can push this yourselves by telling your publisher that you do not want it used for your journal. (And if you are thinking “but we need it for people to submit articles to us”, then you are using it for marketing.)

Will this solve the jIF problem? No. But it is a straightforward, easy step to take (for those willing). It will ultimately mean that those who really care about jIF will have to take the extra step of looking it up (or paying Thomson Reuters). Making jIF less readily available will make it harder to use in inappropriate ways. Tell researchers what your journal does to improve scientific communication, instead of using a one-dimensional, non-transparent, readily gameable, backward-looking two-year indicator as a stand-in that, more often than not, promotes bad scientific practices.

At the very least, it will reduce the sense that we are selling science.

Laurie Goodman

Editor in Chief, GigaScience

Recent comments

We have a long way to go, simply because there is money involved. Publishers’ marketing, and antediluvian attitudes even in so-called enlightened institutions and countries, mean that the younger generation of scientists is still in thrall to the impact factor. In most of the world, the impact factor still decides financial reward, whether directly, as in China, or indirectly, where it is factored into promotions.

Signing DORA is one thing, but we need more direct action. Senior scientists who have nothing to lose should stop reviewing for publishers who, regardless of their editorials, push the impact factor. This would have an effect, if only because publishing in these journals would take much longer.

Given the sociological reality that impact factors (of some sort) are an inevitable part of modern academic production, rather than pretending that IFs are not happening (in which case researchers will simply go to ISI directly), journals and publishers should come up with a genuine, solid alternative and publicize it.