GWAS Reloaded: extended Q&A with PLINK1.9 author Chris Chang

The software application PLINK is one of the most widely used tools in bioinformatics, particularly for genome-wide association studies (GWAS) that look at genetic variants in different individuals to see if any variant is associated with a trait. With the advent of the thousand-dollar genome, the computational demands being made on such programs are exploding. To rise to this challenge, a group of authors at BGI, the NIH and Harvard Medical School have today in GigaScience published a second-generation upgrade of PLINK, with 1000-fold speed increases in some of the features. Following the shorter Q&A in Biome, here we give a reloaded full-length box-set-completist-version of the interview with lead author Chris Chang (BGI). After a PhD at UCSD, his insights into software engineering and research have been honed working in such diverse areas as medical technology start-ups and games design, and he also has the dubious distinction of having been the lowest scorer on the worst-placing US International Mathematical Olympiad team in history.

This work was carried out while you were working on the BGI Cognitive Genomics project looking at things like the genetics of cognitive ability and prosopagnosia (face blindness). How did you get involved in this initiative?

I’ve been interested in the genetics of cognition for about a decade. During that time, I was involved in many online discussions of the topic with my colleague Steve Hsu, and both of us looked forward to the research horizons that would be opened up by cheap and reliable sequencing. When he saw that BGI had started trying to investigate the genetics of intelligence, he figured that the time had come and decided he had to get involved; soon afterward he recruited James Lee and me to help out as well.

Why did you decide to update PLINK, and what did the previous version lack? Has this improved PLINK been useful for the project?

Some time later, we wanted to investigate a hypothesis about genetic distances and quantitative phenotypes, and our initial MATLAB scripts were annoyingly slow. I was assigned the task of writing a C/C++ program to speed up the computation, and after three months of work, I had a weighted distance calculator that I unimaginatively called “WDIST”, and it was good enough to address our computational bottleneck.

WDIST, like many other programs operating on genetic data, accepted PLINK-format files, as well as a small number of PLINK filtering flags (e.g. --maf, which excludes variants with very low minor allele frequency) that my colleagues asked for. And I soon discovered that PLINK could already compute unweighted genetic distance… but it took about 1000 times as long to do it. I had made a significant improvement to a small part of PLINK, even though that wasn’t my initial goal.

Naturally, I wondered whether I had just gotten lucky with the distance problem, or whether my algorithm and data structure choices enabled similarly impressive speedups across a wide range of PLINK functions. So I asked my NIH collaborators if they found any other PLINK commands to be annoyingly slow, and they mentioned LD pruning (Linkage disequilibrium based SNP pruning). And sure enough, the same type of bit-parallel code which sped up genetic distance computation by ~1000x was also good for a ~1000x LD pruning speedup, and it took only a few weeks to implement it. That’s when I decided to try my hand at rewriting the entire program.
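Editorial illustration: the sketch below is not code from PLINK or WDIST, only a toy version of the bit-parallel idea described above. It assumes a hypothetical "thermometer" code for each genotype (0, 1 or 2 copies of an allele stored as the bit pairs 00, 01 and 11) and ignores missing calls. Under that assumed code, XOR-ing two packed samples and counting the set bits gives the sum of absolute allele-count differences over all variants at once, rather than one variant at a time.

```python
# Toy bit-parallel distance. The thermometer code is an assumption made
# for this sketch; it is NOT PLINK's on-disk encoding.
THERMOMETER = {0: 0b00, 1: 0b01, 2: 0b11}


def pack(allele_counts):
    """Pack a list of allele counts (0, 1 or 2) into one integer, 2 bits each."""
    word = 0
    for i, count in enumerate(allele_counts):
        word |= THERMOMETER[count] << (2 * i)
    return word


def popcount(word):
    """Number of set bits in a non-negative integer."""
    return bin(word).count("1")


def packed_distance(a, b):
    """Sum of |count_a - count_b| over all variants: one XOR, one popcount."""
    return popcount(a ^ b)


def naive_distance(xs, ys):
    """Reference implementation that handles one genotype at a time."""
    return sum(abs(x - y) for x, y in zip(xs, ys))


sample_a = [0, 1, 2, 2, 0, 1, 1, 0]
sample_b = [2, 1, 0, 1, 0, 0, 2, 0]
assert packed_distance(pack(sample_a), pack(sample_b)) == naive_distance(sample_a, sample_b)
```

In compiled code the same XOR and popcount would typically run on 64-bit words (32 genotypes at a time) using a hardware popcount instruction; a real implementation would also need to handle missing genotypes.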

I hasten to add that this isn’t because the first version was bad by any reasonable measure. Indeed, the source code for the first version is easier to read and modify, and I’m sure that played a major part in how the program grew to encompass so many functions in the first place. Premature optimization is, correctly, discouraged at every turn. It was also started in 2005, before it had become clear that Moore’s Law had ended for single cores, and before genetic datasets were very large. But things have changed a lot in the past 10 years, and given the continued widespread usage of PLINK after its function set remained unchanged for years, optimization is no longer premature.

As for other tools, there actually were a few programs floating around which used bitwise operators and popcount on PLINK-formatted data. But they only optimized a single function relevant to their own research, such as epistasis or permutation testing. (And usually, that’s the right thing for a researcher to do!) The improved PLINK has certainly been useful for our own research, but I’m not going to pretend that the large time investment in it is obviously justified for that reason alone.

You are now in BGI’s Complete Genomics subsidiary working on their bioinformatics pipelines and tools. Can you say what you are working on at the moment, and has any of the work on PLINK been relevant?

My day job now revolves around genome assembly. Part of the work involves generating VCF files that are readable by third-party tools, so my experience writing PLINK’s VCF input and output commands has definitely been relevant.

PLINK is such a popular tool for the GWAS community, with over 8,000 papers citing its use in Google Scholar. Why do you think it is so popular?

It’s popular because it was the first major bioinformatics program to use an efficient and simple 2-bit genotype encoding, and it provided a comprehensive and well-documented suite of data management and filtering commands to go with it. Once it had traction, there was no reason for other researchers working with hard genotype calls to reinvent the data format wheel. And there has been no way to get around the need for some of the basic data cleaning operations provided by PLINK.
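Editorial illustration: a minimal sketch of a 2-bit genotype encoding, following the layout of the PLINK 1 binary (.bed) format as we understand it: four genotypes per byte, first genotype in the two lowest-order bits, with the codes 00 (homozygous for the first allele), 01 (missing), 10 (heterozygous) and 11 (homozygous for the second allele). The function names are hypothetical, and the file header and per-variant row padding of a real .bed file are left out.

```python
# 2-bit genotype codes (assumed to match the PLINK 1 .bed convention).
HOM_A1, MISSING, HET, HOM_A2 = 0b00, 0b01, 0b10, 0b11


def pack_genotypes(codes):
    """Pack 2-bit genotype codes into bytes, four genotypes per byte."""
    packed = bytearray((len(codes) + 3) // 4)  # round up to whole bytes
    for i, code in enumerate(codes):
        packed[i // 4] |= code << (2 * (i % 4))  # low-order bits first
    return bytes(packed)


def unpack_genotypes(packed, n):
    """Recover the first n genotype codes from packed bytes."""
    return [(packed[i // 4] >> (2 * (i % 4))) & 0b11 for i in range(n)]


codes = [HOM_A1, HET, HOM_A2, MISSING, HET]
packed = pack_genotypes(codes)

# Five genotypes fit in two bytes instead of five or more.
assert len(packed) == 2
assert unpack_genotypes(packed, len(codes)) == codes
```

At four genotypes per byte, a million variants for one sample take roughly 250 kB, which is a large part of why the format is cheap to store and fast to scan.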

A few programs, most notably bcftools, now provide similar functionality for VCF files, but VCF’s versatility is a double-edged sword: it’s substantially harder to develop good software for it. For many purposes, the PLINK binary file format is already good enough (or almost good enough; more on that in a minute), and researchers can get their job done faster by writing programs targeting it instead of VCF.

Why do you think nobody else had updated it in the 5 years since version 1.07? With its popularity, was it daunting at all to decide to re-work the code base?

Keep in mind that the original program took 4 years to write, and by the time I’m done I will have spent about 4 years on it as well. And it’s hard to get a grant for this sort of thing before you’re already half-done: until then, how is a fresh PhD supposed to convince people with money to give that the job is worth doing (what if some newer program makes PLINK obsolete before the update is finished?), and that they’re the right person for the job?

With that said, I’ve never been intimidated by the actual work. My background is in statistics and low-level programming. I was a professional operating system kernel developer in a previous life, and I’ve written things like an exact battle probability distribution calculator for a game (replacing someone else’s Monte Carlo simulation) and a binary file editor in assembly language for fun. There are very few people working in bioinformatics with a similar profile. I couldn’t rule out the possibility that some team elsewhere in the world would do an even better job than me, but as soon as I achieved that ~1000x LD pruning speedup and established that the ~1000x unweighted distance speedup wasn’t an isolated fluke, I knew that my work was likely to be broadly useful.

How did the NIH-NIDDK collaborators participate, and how helpful was (original lead author of PLINK) Shaun Purcell in the process?

As mentioned above, the original program core was written to help my NIH-NIDDK collaborators perform a few expensive computations on their PLINK-formatted data; and they supported me in expanding it into a full-scale PLINK update from the beginning. In particular, they performed a lot of thankless software testing, getting the program to a state where it could be released into the wild without crippling itself with a reputation of being too buggy to be worth the trouble (though there certainly were quite a few rough edges; my fault!).

Shaun Purcell’s main contribution was, of course, doing such a good job designing the original PLINK that I could leave its interface practically unchanged and focus almost entirely on optimization. He also made the crucial decision to officially endorse my update and make the program’s web-check message, his website, and his mailing list point to my work.

Working lat(t)e

Given that you worked much of the time between BGI Shenzhen and Hong Kong without a dedicated office at either, where did you do most of the coding?

Coffee shops. (And now that I’m in Mountain View, I can frequently be found working on PLINK at Red Rock Coffee.) They’re not for everyone, but I’m able to get a lot of work done in that environment.

What are the key features and improvements in your new version of PLINK?

The most important improvement is increased speed; runs that formerly were overnight jobs may now take just a few minutes, and this allows bioinformaticians to learn more quickly and get more done. The largest contributor to this is my avoidance of expensive “unpacking” and “packing” of binary data; instead I take every opportunity I see to perform computation directly on the packed data. In the same amount of time it takes to “unpack” and process a single genotype call, it’s frequently possible to process tens or even hundreds of packed genotype calls if you know the right tricks. I also copied a bunch of new algorithms, developed a few of my own (the fast Fisher’s Exact Test will probably prove to be the most important among them), and added a significant amount of multithreading.
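Editorial illustration: a toy example of computing directly on packed data, not PLINK's actual code. It assumes genotypes are stored as 2-bit codes, four per byte, with 00 = homozygous first allele, 01 = missing, 10 = heterozygous and 11 = homozygous second allele (our understanding of the PLINK 1 binary layout). The hypothetical `count_genotypes` function tallies all four categories with a few masks and popcounts, without ever unpacking an individual genotype.

```python
def popcount(word):
    """Number of set bits in a non-negative integer."""
    return bin(word).count("1")


def count_genotypes(packed, n):
    """Count genotype categories among the first n calls of a packed buffer.

    Assumes unused padding bits at the end of the buffer are zero.
    """
    word = int.from_bytes(packed, "little")
    # 0b01010101 repeated: selects the low bit of every 2-bit genotype.
    low_mask = int.from_bytes(b"\x55" * len(packed), "little")
    low = word & low_mask           # low bit of each genotype
    high = (word >> 1) & low_mask   # high bit, shifted into the low position
    both = low & high               # 11: homozygous second allele
    hom_a2 = popcount(both)
    het = popcount(high ^ both)     # 10: high bit set, low bit clear
    missing = popcount(low ^ both)  # 01: low bit set, high bit clear
    hom_a1 = n - hom_a2 - het - missing  # 00: whatever remains
    return {"hom_a1": hom_a1, "het": het, "hom_a2": hom_a2, "missing": missing}


# Genotypes 00, 10, 11, 01, 10 packed low-order bits first.
example = bytes([0x78, 0x02])
counts = count_genotypes(example, 5)
assert counts == {"hom_a1": 1, "het": 2, "hom_a2": 1, "missing": 1}
```

From these counts, quantities such as allele frequencies or missingness rates follow with a little arithmetic, so a frequency-based filter never needs to look at individual genotypes.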

The lower memory requirement is also key. Most of the time it’s irrelevant, but when it matters it REALLY matters; you no longer need to wait days or weeks for access to a bigger machine to do your job.

Efficient VCF import/export also gets rid of a silly but ubiquitous pain point.

Was it difficult to persuade users to switch to the new version?

I don’t entirely know the answer to this question. I only see the users who have joined the Google group; I don’t know how many potential users tried the program out and decided it wasn’t ready for prime time, or are using it without having joined the group. In other words, I know part of the numerator, but I don’t know the denominator.

But I do have enough testers to get the program to the quality level it deserves.

You call this PLINK 1.9, why not version 2? What are your future plans for PLINK?

I mentioned above that PLINK’s binary file format is sometimes “almost good enough” for statistical and population genetics work in 2015.

Unfortunately, almost good enough is, well, not good enough. As we sequence more genomes, we’ll have more uncertain genotype calls that shouldn’t be treated as either a hard call or missing data, we’ll have better phasing ability, and we’ll observe more triallelic variants that are scientifically or clinically relevant. Today, VCF is the only reasonable option for tracking these types of information; but it’s overkill. There’s considerable demand for a simpler (but not too simple) format that can be processed more efficiently using less code, and I will try to deliver that with PLINK 2.0.

I may fail; this is a more radical change than anything I’ve introduced in PLINK 1.9. In that event, everyone can just pretend 2.0, like The Matrix Revolutions, never happened.

All the code has been released in GitHub under an Open Source GPLv3 License, and before submission you made it available in a pre-print server. How has this open and transparent way of working helped the development of the tool and writing of the paper? Did you receive much useful feedback? Working on your own a lot of the time, how have you managed to test the tool adequately?

Well, first of all, I wouldn’t be allowed to release this tool at all if I rejected GPL, since the original PLINK was released under GPL, and even if I gave my program a different name, there’s no question that I’ve created a closely derived work! And I like this “pay it forward” aspect of the GPL; I think it’s a force for equality of opportunity.

Open source also helps solve the international trust problem. How can an American researcher trust a program written by a Chinese employee of a China-based company with their potentially sensitive data? Well, with open source that you compile from scratch, you know that if any sort of funny business is going on, it has to be driven by code that anyone can inspect for themselves, and I can be sent to prison if anything is found in such an inspection. This is far better than the situation with hardware, where secret backdoors and the like are almost the rule rather than the exception today. I’m pretty sure open source allowed me to gain the trust of more external testers, and that freed me up to spend more time on coding.

In addition, several testers have fixed bugs on their own; Masahiro Kanai (Keio University) has been especially noteworthy in this regard.

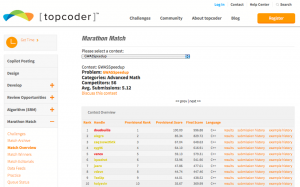

One of the new features came from the winning submission to the TopCoder GWAS Speedup logistic regression crowdsourcing contest. Was this a lucky find, or do you think open innovation and crowdsourced competitions and challenges will likely play a big role in driving innovation in the future?

Future of topcoder: will coding contests be dancing in the moonlight?

I think this type of contest will occupy a solid niche in the future, as researchers get a better handle on what types of problems are best addressed with crowdsourcing. Logistic regression was definitely a good choice: I had no experience in the area, and was not able to achieve more than a ~3x speedup on my own, while the contest winner achieved almost 50x.